Captioning Hypnotoad—A Quest That Helped Define a Field: An Interview with Sean Zdenek

Future Directions

[gz] On your site, you share a few examples of kinetic captions. Could you talk a bit more about your interest in them?

[SZ] Over the years, captioning style hasn't changed much, despite enormous advances in entertainment and Internet technologies (see Udo & Fels, 2010). Letterforms tend to be uninspiring and aesthetically lifeless. Captions are typically dumped onto the screen in bottom-centered alignment. Style guidelines have remained static. Quality tends to be reduced to a set of decontextualized rules. For example, all caps styling continues to be a popular option for prerecorded content despite strong arguments for mixed-case captions (Udo & Fels, 2010). While the best interfaces present users with multiple options for configuring the display of captions on their screens (typeface, type size, color, contrast, etc.), we need to ask whether the visual design of captions can be improved further, particularly on the production side.

We also need to ask whether captions can be more tightly integrated into the narratives they are designed to support. One familiar critique is that captioning itself is not tightly folded into the creative process. It's typically an add-on at best and an afterthought at worst. It does not serve narratives but legal mandates. Technical limitations constrain captioning practices. Producers do not check up on captioners to see if their visions have been channeled accurately or appropriately, which, as I have already mentioned elsewhere in this interview, provides professional captioners with enormous freedom. But that freedom is not grounded in a close collaboration with the creative team.

So I've been thinking lately about what it might mean to bake captions into the narrative, to integrate them more fully and more naturally, to blend form and content in innovative ways. Can we draw upon the resources of visual design—placement, color, typography, size, even simple animations—to produce captions that visually fit the film's overall design aesthetic? Can content and meaning be reinforced through form and design? After watching Night Watch on DVD in Fall 2015, I became more serious about putting some of my ideas into action. Night Watch is a Russian horror film by noted director Timur Bekmambetov (2006), who "insisted on subtitling it and took charge of the design process himself," as opposed to having the Russian speech dubbed into English or leaving the subtitling process to an outside company (Rawsthorn, 2007). He adopted an innovative approach: "We thought of the subtitles as another character in the film, another way to tell the story" (Rosenberg, 2007). The English subtitles in Night Watch are striking, innovative, and beautiful. They acquire dimensionality by moving into the scene itself to become another object that can be affected by the objects around them. They also reinforce meaning through typographic effects and animations. In one of the most discussed examples, the subtitles dissolve away like blood, thus reinforcing the vampiric overtones in the movie.

A small number of studies have explored the potential for closed captioning to animate meaning and create a richer, more accessible experience. In these studies, captions begin to come alive with movement, effects, dimensionality, color, and even avatars to accompany speaker identifiers (see Rashid, Aitken, & Fels, 2006; Rashid, Vy, Hunt, & Fels, 2008; Strapparava, Valitutti, & Stock, 2007; Vy, 2012; Vy & Fels, 2009; Vy, Mori, Fourney, & Fels, 2008). Animated captions offer an alternative in which the dynamic presentation of meaning—a fusion of form and content—can potentially enhance the experience without either sacrificing clarity or giving way to over-produced and over-designed caption tracks that intrude more than inform. Put another way, animated captioning and kinetic typography hold the promise of embodying meaning and "'giving life' to texts" (Strapparava et al., 2007, p. 1724).

In spring 2016, I received a Humanities Center Fellowship from Texas Tech to study animated captioning. I argued in my proposal that there is much more we can be doing to complement the small body of research in this area. As a result, I’ve been pushing my skill level with Adobe After Effects to create open-captioned versions of a variety of television and movie clips. I've been primarily interested in addressing three hard problems in captioning:

- how to distinguish multiple speakers in the same scene. The standard options when multiple speakers are talking—either screen positioning or preceding hyphens—are severely limiting in fast-paced, back-and-forth contexts.

- how to signal sonic dimensionality along two main axes: near/far sounds and loud/quiet sounds. The standard caption track tends to flatten the soundscape, as all sounds tend to become equally "loud" on the caption track, a transformation of meaning I refer to as equalization.

- how to reinforce the meaning of sound effects, ambient sounds, and music.

This work is experimental and likely to be controversial. I plan to study a sample of my open-captioned clips with a wide range of viewers, including viewers who are deaf and hard-of-hearing.

[gz] In several discussions and emails, you have mentioned connecting or collaborating with Big Data style research and captions. Can you discuss this a bit more, give us an idea of what you’re thinking about?

[SZ] In my research and writing, I tend to start with a single example—a curious caption—and build up from there. In rhetorical studies, we might refer to this approach as emic or bottom up. I'm less concerned, at least initially, with how existing theoretical perspectives might make sense of captions as rhetorical artifacts. For example, my thinking about how captions manipulate time—and especially tell the future—started to come into focus after I watched the climactic final rescue scene in Taken (Morel, 2008) on DVD. With the help of the closed captions, and a single em dash at the end of one caption, I correctly guessed the moment when Liam Neeson would shoot the final bad guy. I had a lot of help in that moment, first and foremost from the conventions of the Hollywood action movie itself and the promise of a happy ending. I also got some help from the caption's early appearance on the screen and its staying power as it lingered on the screen at a very slow reading speed of 107 words per minute. In other words, I could read the bad guy's speech caption ("We can nego—") and process why he might not finish saying the word "negotiate" before he even uttered the line. The end-of-caption em dash denoted a major interruption, and what could be a bigger interruption than Neeson, with gun aimed at the bad guy's head, shooting him dead before he can finish making his plea to negotiate? (Warning: This clip is violent.)
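The reading speed mentioned above is simple arithmetic: the number of words in a caption divided by the time it stays on screen, scaled to a minute. The sketch below illustrates the calculation only. The function name and the sample caption and timings are hypothetical and are not the actual caption data from Taken.

```python
def reading_speed_wpm(text: str, start_s: float, end_s: float) -> float:
    """Words per minute needed to read a caption while it is on screen."""
    duration = end_s - start_s
    if duration <= 0:
        raise ValueError("caption must end after it starts")
    # Words are counted by splitting on whitespace, a common simplification.
    return len(text.split()) / duration * 60.0


# Hypothetical caption: five words displayed for 2.5 seconds.
speed = reading_speed_wpm("Please just let me explain", 10.0, 12.5)
print(round(speed))  # 5 words / 2.5 s * 60 = 120
```

A caption that lingers longer for the same number of words yields a lower figure, which is what makes a slow caption easier to read ahead of the speech.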

I hadn't really considered the role of em dashes in captioning at this point, but this scene set in motion for me an exploration of a whole set of higher level concepts: end-of-caption punctuation (em dashes but also ellipses and the differences between them), reading speed, predictive reading, time-shifting and future telling in captioning, and so on. What began as a blog post about that climactic scene in Taken turned into a more sustained reflection on temporality in closed captioning over the next couple years. Examples began to accumulate, allowing me to make more general claims about the power of dots and dashes to tell the future. At some point, I considered captions in these and other examples to be a form of dramatic irony, coining the term captioned irony to explain the differences between listening (with captions turned off) and reading (with captions turned on). Chapter 5 of my book is titled "Captioned Irony" and explores these productive tensions, starting with the clues available in ending punctuation and moving on to other examples of irony in captioning: ironic identifications (e.g., when speaker identifiers and naming conventions give information away too soon) and ironic juxtapositions (e.g., when captions cross boundaries they shouldn't).

I brought dozens of examples to bear on my arguments about captioned irony as I scaled up, across, and back down the conceptual ladder. (By my count, there are 52 video clips to support Chapter 5.) But I didn't have access to a large corpus of official caption data, which is one of the pressing challenges in caption studies. The subtitle sharing sites such as Subscene.com are full of subtitle files (speech only) in dozens of languages but contain few accurate and true closed caption files (speech and nonspeech) and no way to search inside them. The few files that were marked as containing true closed captions on the sharing sites could not be counted on to match the official captions on the DVD versions in my possession. To address this problem, I set out to create my own small corpus of official DVD caption files. Note that a corpus of any size does not exist for outsiders/researchers who wish to study professional movie captioning. Creating a collection of searchable caption files wasn't my first goal. I originally needed a way to pinpoint the exact location of the captions that I had noted along the way. If I wanted to track the recurrence of a single nonspeech caption in a single movie (e.g., all the gasping in Twilight, all the grunting and screaming in Oblivion, all the guttural croaking in Aliens vs. Predator: Requiem), I needed official time-stamped caption files that I could run simple searches on.

To scale up beyond a single movie, I needed a corpus of official caption files, which I began to compile. But the process of extracting caption files from DVDs is time-consuming, involving at least three software programs (DVD ripper, DVD extractor, subtitle extractor). Because the captions are stored on DVDs as bitmap images, a subtitle extractor is needed that can recognize the shape of each letter or character (with help from the user) and copy them into a plain text file along with a caption number and timestamp. These caption files can be searched together to provide additional examples (e.g., every dog barking caption, every silence caption, every reference to a particular kind of music, etc.) but also to follow up on hunches. Because I have been primarily interested in nonspeech captions and nonspeech sounds, I was also interested in separating all the speech captions from the nonspeech captions, as well as isolating all of the captions containing speaker identifiers, because—well, I wasn't exactly sure at the time. I just knew that nonspeech captions held so much fascination for me. My older son wrote a simple program in Perl that made all this happen, with output to HTML tables that could be easily imported into Excel for coding and re-sorting. In other words, each movie caption file was separated into three tables with columns for caption number and timestamp: speaker IDs, nonspeech captions, and full caption file. Caption numbers in the tables were linked to the full caption file so each caption could be viewed in its original context. Search results were also presented in table format. Recently, he made this program publicly available (minus the copyrighted caption files) on GitHub.
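The separation described above might look something like the following sketch. It is written in Python rather than Perl and is not the program mentioned in the interview. It assumes the extracted caption file is in the common SubRip (.srt) layout (caption number, timestamp line, text), that nonspeech captions are wrapped in brackets or parentheses, and that speaker identifiers are an all-caps name followed by a colon. All names here (`Caption`, `parse_srt`, `split_tables`) are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Caption:
    number: int
    timestamp: str
    text: str


# Assumed conventions, not taken from the interview:
# nonspeech captions look like [Gasps] or (gasps);
# speaker IDs look like "MAN:" at the start of a caption line.
NONSPEECH = re.compile(r"\[[^\]]+\]|\([^)]+\)")
SPEAKER_ID = re.compile(r"^[A-Z][A-Z .']+:", re.MULTILINE)


def parse_srt(raw: str) -> list[Caption]:
    """Parse SubRip-style text into numbered, time-stamped captions."""
    captions = []
    for block in re.split(r"\n\s*\n", raw.strip()):
        lines = block.strip().splitlines()
        if len(lines) < 3 or not lines[0].strip().isdigit():
            continue  # skip malformed blocks
        text = "\n".join(line.strip() for line in lines[2:])
        captions.append(Caption(int(lines[0]), lines[1].strip(), text))
    return captions


def split_tables(captions: list[Caption]) -> dict[str, list[Caption]]:
    """Return the three tables: speaker IDs, nonspeech captions, full file."""
    return {
        "speaker_ids": [c for c in captions if SPEAKER_ID.search(c.text)],
        "nonspeech": [c for c in captions if NONSPEECH.search(c.text)],
        "full": list(captions),
    }


SAMPLE = """1
00:00:01,000 --> 00:00:03,000
[Gasps]

2
00:00:04,000 --> 00:00:06,000
MAN: Who's there?

3
00:00:07,000 --> 00:00:09,000
Nobody you know.
"""

tables = split_tables(parse_srt(SAMPLE))
for name, rows in tables.items():
    print(name, [(c.number, c.timestamp) for c in rows])
```

Because every row keeps its caption number, a caption found in the nonspeech or speaker ID table can be looked up in the full table and read in its original context.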

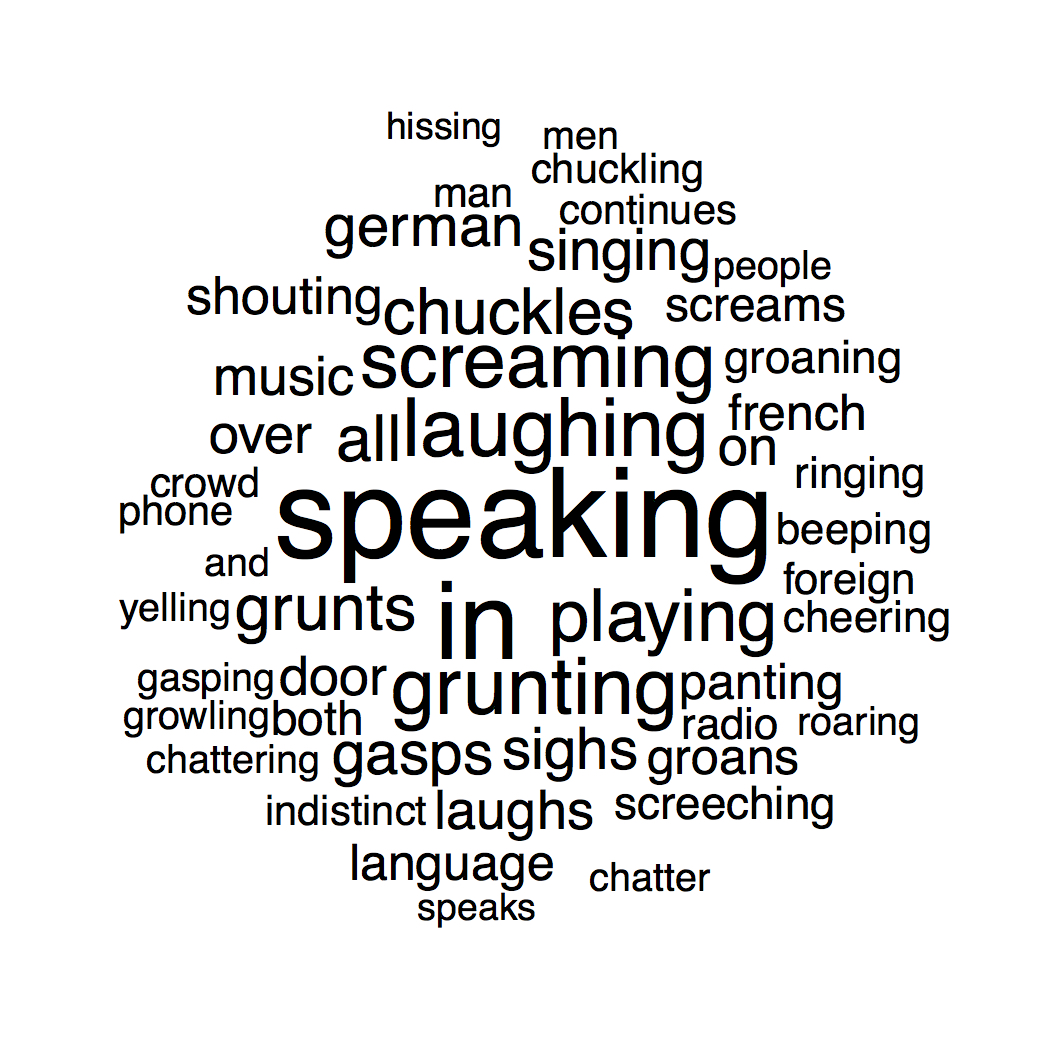

What's needed now is a much larger corpus of official caption files—thousands of files—that researchers can study from a variety of perspectives. Ideally, the corpus could be imported into a database and linked to the movies themselves in the spirit of an interactive transcript. We could also create a concordance out of our caption data. With my small set of caption data, I've created a concordance (using CasualConc) to explore the most popular and least popular (or unique) verbs for describing paralinguistic sounds. Nonspeech captions are typically built up around verbs. (The most popular nonspeech verbs in my corpus: speaks, laughs, grunts, screams, plays, chuckles, gasps, sighs. A few of the unique verbs in my corpus of nonspeech captions: tuts, tsks, churrs, sniggers, harrumphs, glugging.) Captioning advocates and researchers have never catalogued nonspeech verbs in this way, not even in a single movie. I'm excited by the possibilities. There's so much more we can do to understand closed captioning as a rhetorical text. Big data can complement, challenge, and extend this work.
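A verb tally of this kind can be approximated with a few lines of code. The sketch below is not how CasualConc works. It assumes nonspeech captions are bracketed or parenthesized and uses a crude heuristic (words ending in "-s" or "-ing") to guess at verbs, which will also pick up plural nouns. A real study would rely on hand coding or a part-of-speech tagger. The function name and sample captions are hypothetical.

```python
import re
from collections import Counter

# Assumed convention: nonspeech descriptions appear in [brackets] or (parentheses).
BRACKETED = re.compile(r"\[([^\]]+)\]|\(([^)]+)\)")


def nonspeech_verbs(caption_texts):
    """Count likely verbs inside nonspeech captions (suffix heuristic only)."""
    counts = Counter()
    for text in caption_texts:
        for match in BRACKETED.finditer(text):
            description = (match.group(1) or match.group(2)).lower()
            for word in re.findall(r"[a-z']+", description):
                # Crude guess: "laughs", "barking"; plural nouns slip through too.
                if word.endswith("s") or word.endswith("ing"):
                    counts[word] += 1
    return counts


sample = ["[Gasps]", "[Man Laughs]", "(laughs)", "Who's there?", "[Dog Barking]"]
counts = nonspeech_verbs(sample)
print(counts.most_common(3))
print(sorted(w for w, n in counts.items() if n == 1))  # verbs used only once
```

Sorting by frequency surfaces the most popular verbs, while filtering for a count of one surfaces the unique ones.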

Closing Comment

[gz] As the field of caption studies continues to emerge, what kinds of interdisciplinary collaborations do you anticipate? What kinds would you like to see happen? Since caption studies is an emerging field, what kind of emphases would you like to see?

[SZ] Caption studies is a large collection of approaches. In my book, I offer an open-ended definition: Caption studies is "a research program that is deeply invested in questions of meaning at the interface of sound, writing, and accessibility" (Zdenek, 2015, p. 2). In other words, caption studies is an umbrella term for welcoming a wide array of questions and studies.

My own research tackles one aspect of caption studies—approaching it from the side of rhetorical studies and the humanities—but I am excited about other frameworks and methodologies. A list of emphases in caption studies might include the following:

- Literacy studies

- Big data studies

- Rhetorical/textual/film studies

- Style guidelines: Analysis and critique

- Advocacy and public outreach: Strategies and assessments

- Amateur/DIY captioning

- Experiments with animated and new forms of captioning

- Pedagogy/training/classroom studies (e.g., methods of teaching accessibility)

- Usability and User Experience studies (e.g., eye tracking studies, reading speed studies)

- Institutional studies/critiques

- Workplace studies of professional captioners

- The role of law and policy

- Social science research: Surveys, interviews, focus groups, case studies

- Software and hardware application and development

- Advances in live captioning

These emphases can be combined in productive ways. I'm particularly interested in collaborating with social science researchers who do eye-tracking research or create and manage databases. Eye-tracking studies in captioning research are rare. Perhaps the most well-known eye-tracking study, by Carl Jensema, Ramalinga Sarma Danturthi, and Robert Burch (2000), was performed at a time we may not recognize or remember today. The Television Decoder Circuitry Act of 1990 (implemented in 1993) was only a few years old. Prior to this time, viewers needed to buy a pricey set-top box (around $200) to watch captions on their televisions. Captioning was not yet prevalent on TV either. While 100% captioning was mandated by the Telecommunications Act of 1996 for all new and nonexempt content, it would take a full decade (2006) for the law to be rolled out fully. Finally, broadband speeds and Internet video were not standard in the late 1990s. This important eye-tracking study, which found that viewers spend almost all of their time reading captions (between 82 and 86%), needs to be replicated with a tech-savvy audience who have lived in the shadow of 100% television captioning and the Communications and Video Accessibility Act of 2010, which requires closed captioning on all TV content redistributed on the Internet. New eye-tracking studies might be combined with the arguments of rhetorical critics such as those I offer in Reading Sounds. Rather than an open-ended study of where users look on the screen and for how long, I can imagine eye-tracking studies that test claims about various facets of what I call captioned irony. For example, we could design studies of end-of-caption punctuation (em dashes and ellipses) and how viewers' eyes engage with them through different examples. Other studies could explore cultural literacy, showing viewers clips that reference sonic allusions in order to track their eye movements and test their understanding or knowledge of the meaning of such allusions.

![Screenshot of Hypnotoad from the Episode 'Bender Should Not Be Allowed on TV' on DVD with caption: [Eyeballs Thrumming Loudly]](assets/hypnotoad-bendershouldnotbeallowedontv-dvd.jpg)